activation function

- References:

- Reading:

- Tom Mitchell, lecture slides for Chapter 4

- Tom Mitchell, Machine Learning, Chapter 4

- Questions:

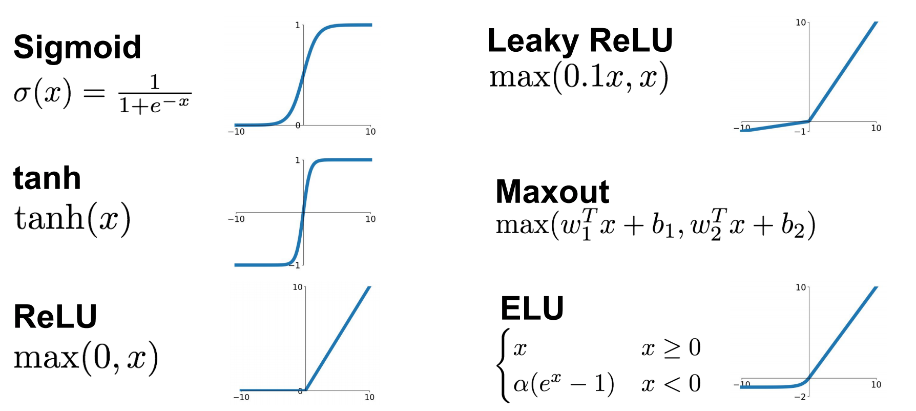

- Also known as a squashing function, because it squashes an unbounded input into a bounded output range

Figure 1: Activation Functions, from this post

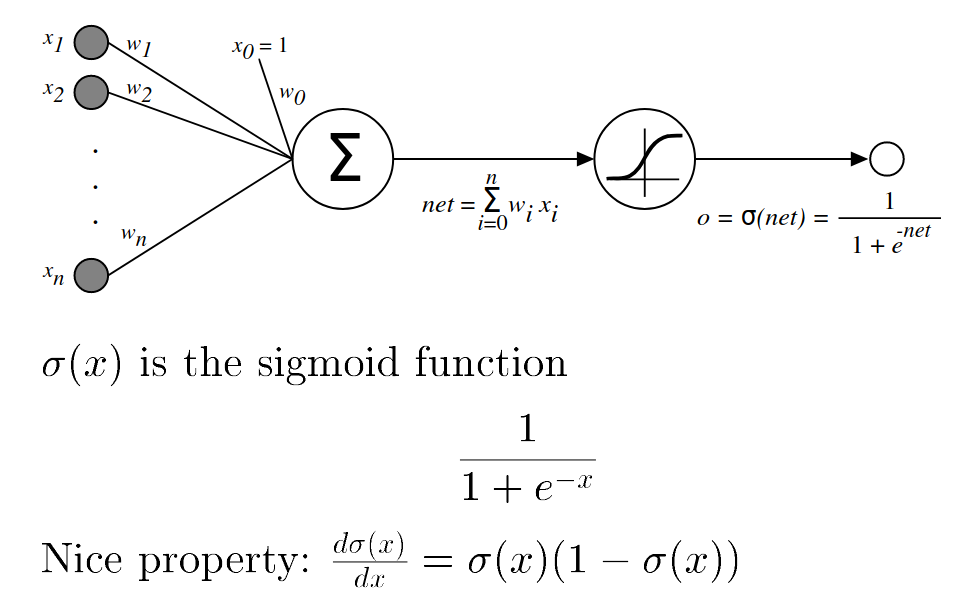

Sigmoid

- The output is a nonlinear, differentiable function of its inputs: a smoothed version of the perceptron's hard threshold

- Also called the logistic function

- The output ranges from 0 to 1 (strictly, the open interval (0, 1))

Figure 2: The sigmoid threshold unit, from Tom Mitchell Lectures
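The sigmoid unit above computes \(\sigma(x) = \frac{1}{1 + e^{-x}}\), whose derivative has the convenient form \(\sigma'(x) = \sigma(x)(1 - \sigma(x))\). The minimal Python sketch below illustrates both; the function names `sigmoid` and `sigmoid_derivative` are hypothetical, and the branch on the sign of `x` is an assumed numerical-stability trick (not from the source) that avoids overflow in `math.exp` for large negative inputs.

```python
import math


def sigmoid(x: float) -> float:
    """Logistic sigmoid 1 / (1 + e^-x), computed in a numerically stable way."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    # For negative x, use the equivalent form e^x / (1 + e^x) so that
    # math.exp never receives a large positive argument (which would overflow).
    z = math.exp(x)
    return z / (1.0 + z)


def sigmoid_derivative(x: float) -> float:
    """d/dx sigmoid(x) = sigmoid(x) * (1 - sigmoid(x))."""
    s = sigmoid(x)
    return s * (1.0 - s)


if __name__ == "__main__":
    # At x = 0 the sigmoid is exactly 0.5 and its slope is at its maximum, 0.25.
    for x in (-5.0, 0.0, 5.0):
        print(f"x={x:+.1f}  sigmoid={sigmoid(x):.4f}  derivative={sigmoid_derivative(x):.4f}")
```

Because the derivative is expressed through the output itself, backpropagation can reuse the value already computed in the forward pass instead of evaluating another exponential.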

Tanh

\(f(x) = \tanh(x) = \frac{e^{x} - e^{-x}}{e^{x} + e^{-x}}\)

\(f'(x) = 1 - \tanh^{2}(x)\)
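The two tanh formulas can be checked numerically with a short Python sketch, assuming plain floats. `tanh_from_definition` and `tanh_derivative` are hypothetical names: the first evaluates the exponential formula literally and is compared against the standard library's `math.tanh`, and the second applies \(f'(x) = 1 - \tanh^{2}(x)\). Unlike the sigmoid, tanh is zero-centred, with outputs in (-1, 1).

```python
import math


def tanh_from_definition(x: float) -> float:
    """tanh(x) = (e^x - e^-x) / (e^x + e^-x), written out exactly as in the formula.

    Fine for moderate |x|; math.exp raises OverflowError once |x| exceeds about 709,
    so real code should prefer math.tanh.
    """
    ex, emx = math.exp(x), math.exp(-x)
    return (ex - emx) / (ex + emx)


def tanh_derivative(x: float) -> float:
    """f'(x) = 1 - tanh(x)^2, using the numerically stable math.tanh."""
    t = math.tanh(x)
    return 1.0 - t * t


if __name__ == "__main__":
    # Compare the literal formula with the library version and show the slope.
    for x in (-2.0, 0.0, 2.0):
        print(f"x={x:+.1f}  formula={tanh_from_definition(x):+.4f}  "
              f"math.tanh={math.tanh(x):+.4f}  derivative={tanh_derivative(x):.4f}")
```

As with the sigmoid, the derivative depends only on the unit's output, and it peaks at 1 when \(x = 0\).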