Recurrent Neural Networks

- Acknowledgement

https://d2l.ai/chapter%5Frecurrent-neural-networks/index.html

https://stanford.edu/~shervine/teaching/cs-230/cheatsheet-recurrent-neural-networks

https://colah.github.io/posts/2015-08-Understanding-LSTMs/ [Mainly LSTM]

https://deeplearning.cs.cmu.edu/S20/document/recitation/recitation-7.pdf

http://cs231n.stanford.edu/slides/2017/cs231n%5F2017%5Flecture10.pdf

https://github.com/fastai/fastbook/blob/master/12%5Fnlp%5Fdive.ipynb [fastai NLP deep dive, RNN architecture]

http://ethen8181.github.io/machine-learning/deep%5Flearning/rnn/1%5Fpytorch%5Frnn.html#Recurrent-Neural-Network-(RNN) [Main reference]

https://medium.com/ecovisioneth/building-deep-multi-layer-recurrent-neural-networks-with-star-cell-2f01acdb73a7 [Multi Layer]

https://towardsdatascience.com/pytorch-basics-how-to-train-your-neural-net-intro-to-rnn-cb6ebc594677

https://www.jeremyjordan.me/introduction-to-recurrent-neural-networks/

Sebastian Raschka, character generation using an LSTM cell in PyTorch

Recurrent neural networks (RNNs) are designed to handle sequential data, such as text and stock prices.

RNNs introduce a state variable (the hidden state) that stores information from past inputs. The hidden state, together with the current input, determines the current output.
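The role of the hidden state can be sketched in a few lines of plain Python. This is only an illustration under assumptions that are not in the notes above: it uses the common vanilla (Elman-style) update `h_t = tanh(W_xh x_t + W_hh h_prev + b_h)`, and the names `rnn_step`, `W_xh`, `W_hh`, `b_h` and the toy weight values are hypothetical.

```python
import math

def rnn_step(x_t, h_prev, W_xh, W_hh, b_h):
    """One vanilla RNN update (assumed form): h_t = tanh(W_xh x_t + W_hh h_prev + b_h)."""
    h_t = []
    for i in range(len(b_h)):
        pre = b_h[i]
        # contribution of the current input
        pre += sum(W_xh[i][j] * x_t[j] for j in range(len(x_t)))
        # contribution of the past, carried by the previous hidden state
        pre += sum(W_hh[i][j] * h_prev[j] for j in range(len(h_prev)))
        h_t.append(math.tanh(pre))
    return h_t

# Hypothetical toy weights: 2 input features, 2 hidden units.
W_xh = [[0.5, -0.3], [0.1, 0.8]]
W_hh = [[0.2, 0.0], [0.0, 0.2]]
b_h = [0.0, 0.0]

x = [1.0, 0.0]
# Same current input, different past: the new hidden state differs.
h_from_zero = rnn_step(x, [0.0, 0.0], W_xh, W_hh, b_h)
h_from_past = rnn_step(x, [1.0, -1.0], W_xh, W_hh, b_h)
print(h_from_zero, h_from_past)
```

Feeding the same input `x` with two different previous hidden states gives two different results, which is the sense in which the state "stores past information".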

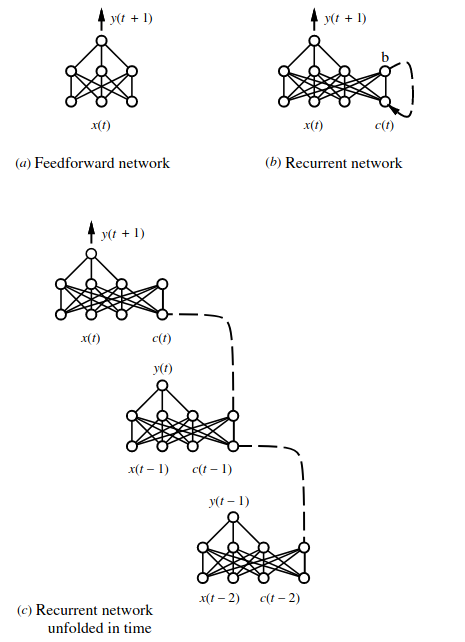

Figure 1: RNN vs. NN

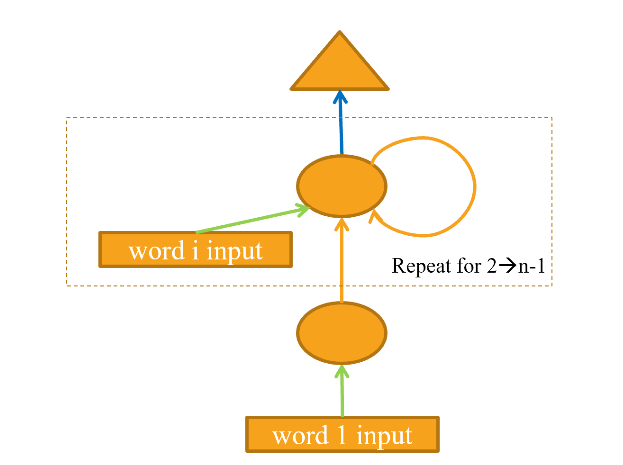

Figure 2: A folded RNN

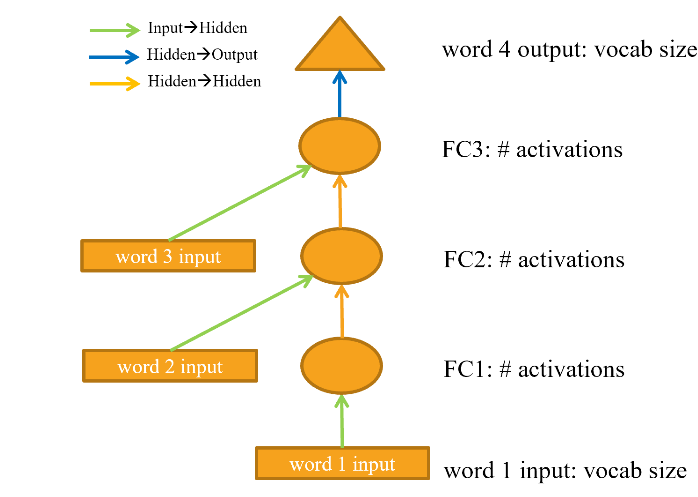

Figure 3: An unfolded RNN
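Unfolding can also be read as a loop: the same cell, with the same weights, is applied once per time step, and each step receives the hidden state produced by the previous one. The sketch below assumes a scalar vanilla RNN cell with a `tanh` activation; `rnn_cell`, `unroll`, and the weight values are hypothetical names and numbers chosen for illustration.

```python
import math

def rnn_cell(x_t, h_prev, w_x, w_h, b):
    """Scalar vanilla RNN cell (assumed form): h_t = tanh(w_x * x_t + w_h * h_prev + b)."""
    return math.tanh(w_x * x_t + w_h * h_prev + b)

def unroll(xs, h0, w_x, w_h, b):
    """Apply the same cell at every time step and collect all hidden states."""
    hs = []
    h = h0
    for x_t in xs:
        # the weights are shared across steps; only h changes
        h = rnn_cell(x_t, h, w_x, w_h, b)
        hs.append(h)
    return hs

# Only the first input is non-zero, yet later hidden states stay non-zero
# because the first input is carried forward through the hidden state.
hidden_states = unroll([1.0, 0.0, 0.0], h0=0.0, w_x=1.0, w_h=0.5, b=0.0)
print(hidden_states)
```

The folded diagram corresponds to `rnn_cell` alone; the unfolded diagram corresponds to the iterations of the loop in `unroll` laid out side by side.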

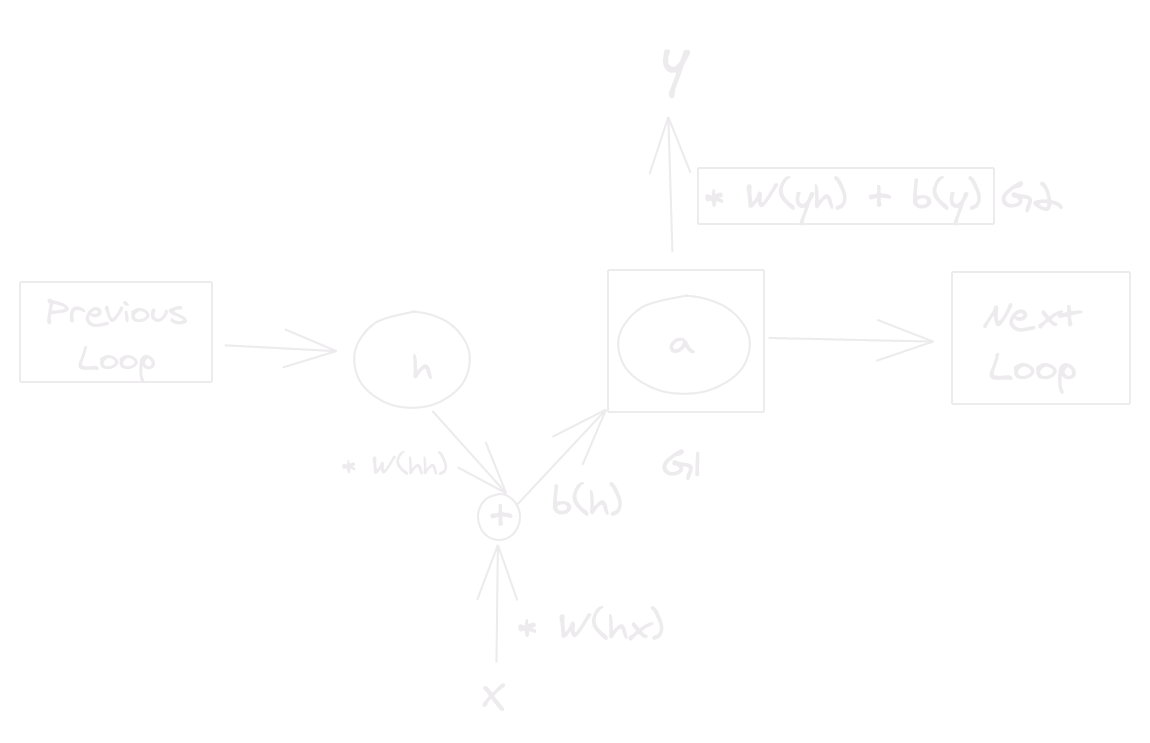

- Internal State of RNN

Figure 4: A state diagram