ML Model Evaluation

categories:

Machine Learning

- references

- questions

ml model evaluation methods

Classfication

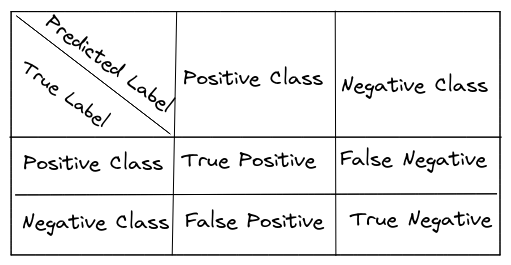

True Positive (TP)

- Positive class correctly labeled/predicted

False Negative (FN)

- Positive class incorrectly labeled/predicted

False Positive (FP)

- Negative class incorrectly labeled/predicted

True Negative (TN)

- Negative class correctly labeled/predicted

Accuracy

- It is simply a ratio of correctly predicted observation to the total observations.

- Accuracy = \(\frac{TP + TN}{TP + TN + FN + FP}\)

Precision

- Precision = \(\frac{True Positive}{True Positive + False Positive}\)

- From all the postive prediction given by our hypothesis/model how many examples were true positive

Recall

- Recall = \(\frac{True Positive}{True Positive + False Negative}\)

- From all the postive examples how many examples were correctly classified by our hypothesis/model

F1 Score

A harmonic mean between recall and precision

- Why ?

- Tries to give the lowest value between recall and precision

- biased to the lowest value

- Balances recall and precision

- Why ?

F1 Score = \(\frac{2}{\frac{1}{Precision} + \frac{1}{Recall}}\)

From Wikipedia

- In information retrieval and machine learning, the harmonic mean of the precision and the recall is often used as an aggregated performance score for the evaluation of algorithms and systems: the F-score (or F-measure). This is used in information retrieval because only the positive class is of relevance, while number of negatives, in general, is large and unknown.[14] It is thus a trade-off as to whether the correct positive predictions should be measured in relation to the number of predicted positives or the number of real positives, so it is measured versus a putative number of positives that is an arithmetic mean of the two possible denominators.

Dice Score

It is F1 Score

Dice Score = \(\frac{2 * Intersection}{Union + Intersection}\)

= \(\frac{2*TP}{2*TP + FP + FN}\)

For Image Segmentaion evaluation

Confusion Matrix

- The scikit learn confusion matrix representation will be a bit different, as scikit learn considers

- the actual target classes as columns

- the predicted classes as rows,

Classfication Report

- It shows a representation of the main classification metrics on a per-class basis.

- The classification report displays the precision, recall, F1, and support scores for the model.

- These metrics are defined in terms of true and false positives, and true and false negatives.